Since the beginning of the full-scale war, the Gwara Media team has been publishing a daily digest of fake news based on requests received via the Perevirka bot. For this report, we analyzed 298 cases published in the Daily Fakes section.

Daily Fakes is a newsletter featuring the most noteworthy pieces of disinformation detected while checking readers’ requests. From May 1 to August 1, 2022, we received on average about 185 requests per day, with peaks of 300-500 requests per day.

In total, we processed 16,598 direct messages and identified 2,555 fake news items and 1,148 manipulations.

The analytical report below is based on requests from May 1 to July 31.

Results

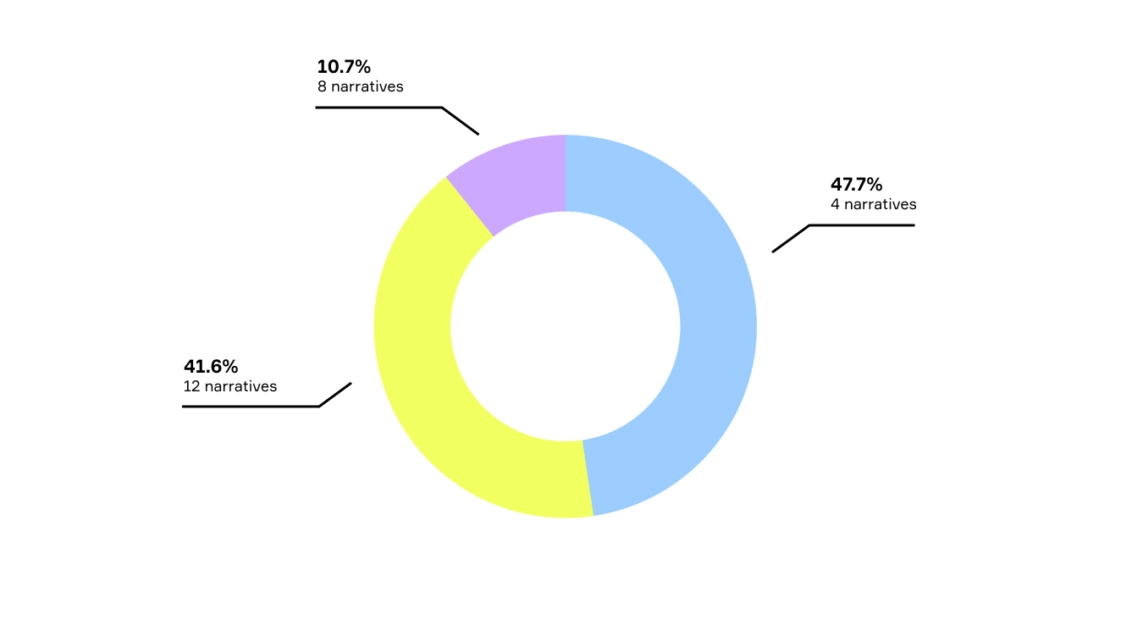

In the course of the analysis, Gwara Media identified 24 main narratives. For ease of reading, we divided them into three groups according to the number of unique fakes.

Group 1. The most common narratives

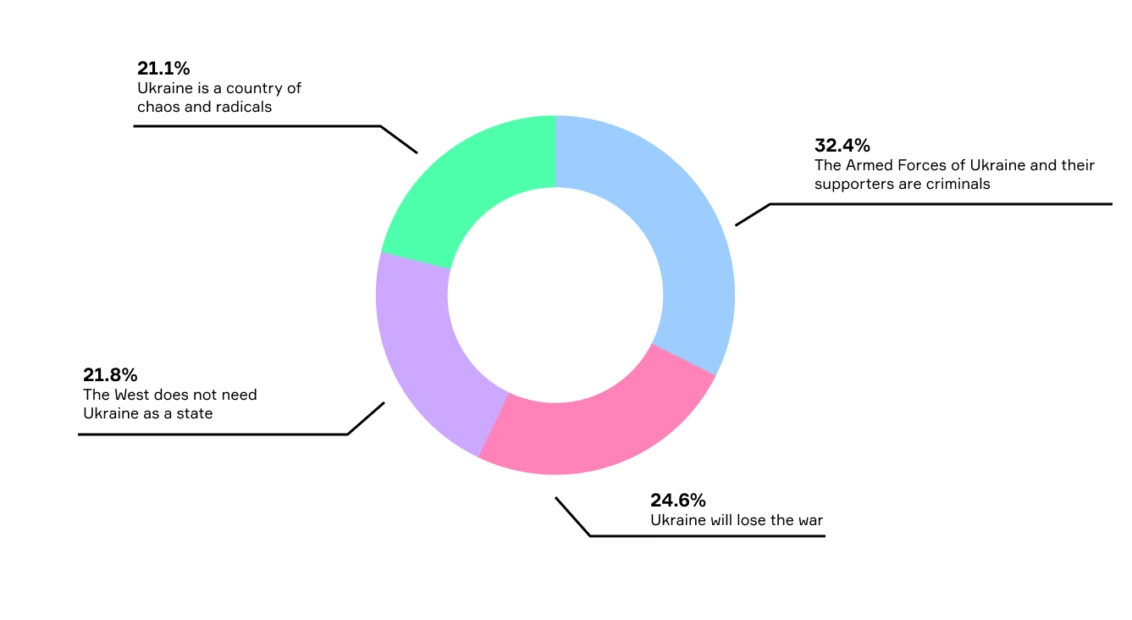

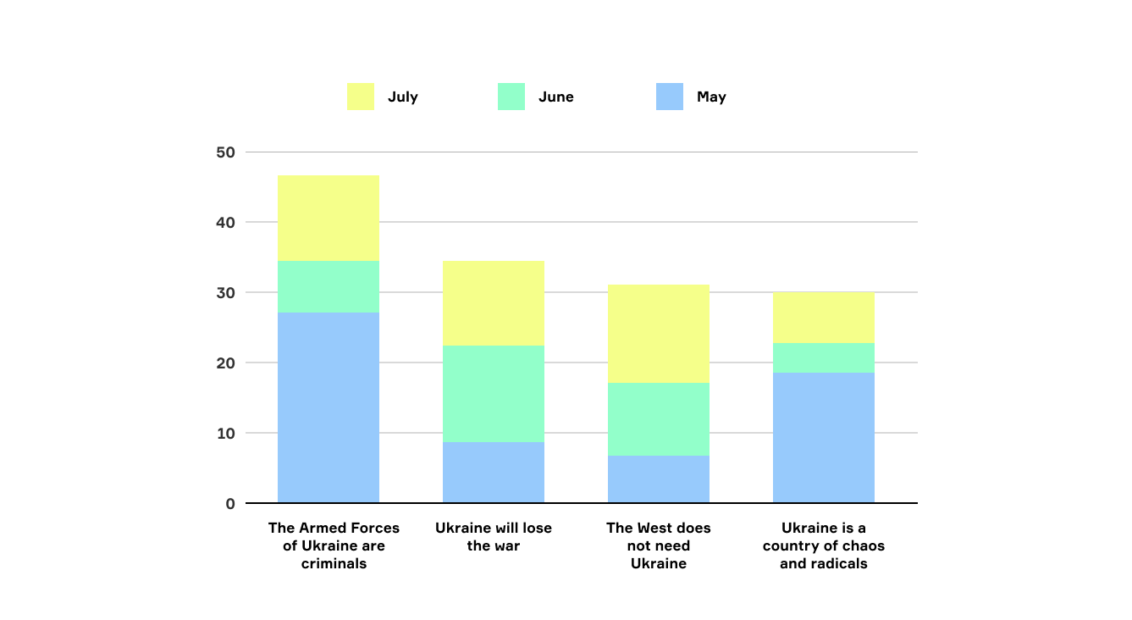

The four most common narratives, detected in 142 pieces of disinformation, are the focus of our analysis:

- “The Armed Forces of Ukraine and their supporters are criminals” (32.4%).

- “Ukraine will lose the war” (24.6%).

- “The West does not need Ukraine as a state” (21.8%).

- “Ukraine is a country of chaos and radicals” (21.1%).
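The shares above are percentages of the 142 unique cases in this group. As a quick consistency check, the minimal sketch below converts each share back to an approximate case count. The function name `shares_to_counts` is our own illustration, not part of any Gwara Media tooling, and it assumes the published shares were rounded to one decimal place.

```python
def shares_to_counts(total: int, shares: list[float]) -> list[int]:
    """Convert percentage shares of a group back to approximate case counts.

    Each count is rounded to the nearest integer, because the published
    shares are themselves rounded to one decimal place.
    """
    return [round(total * share / 100) for share in shares]


# Group 1: four narratives, 142 unique cases (shares from the list above).
GROUP_1_TOTAL = 142
GROUP_1_SHARES = [32.4, 24.6, 21.8, 21.1]

counts = shares_to_counts(GROUP_1_TOTAL, GROUP_1_SHARES)
print(counts)       # [46, 35, 31, 30]
print(sum(counts))  # 142
```

The same function applies to the other two groups (124 and 32 unique cases). In every group, the recovered counts add up exactly to the stated total.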

We presume these four narratives were likely the most widespread elements of the disinformation waves in Ukraine from May 1 to July 31. The chart below shows that they appeared in every month of the studied period.

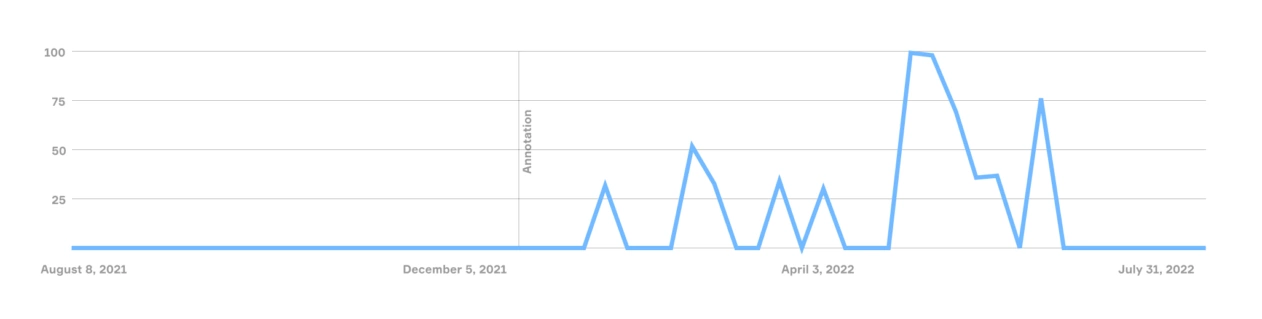

To show that this classification of requests is effective for detecting waves of disinformation, we analyze two separate narratives and demonstrate how an increase in bot requests correlates with an increase in similar Google search queries.
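The report compares the two waves visually and does not name a statistic. One way to put a number on "correlates" is sketched below. It assumes two series aligned on the same time grid: counts of bot requests tagged with a narrative, and the Google Trends interest index for related queries. Google Trends may return weekly rather than daily values for longer ranges, so the bot counts may need resampling first. The sketch computes Pearson's r and the offset between the two peaks. All names (`pearson_r`, `peak_index`, `compare_waves`) are hypothetical illustrations, not Perevirka or Google APIs.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have the same length (at least 2)")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


def peak_index(series: list[float]) -> int:
    """Index of the first maximum, i.e. the period in which a wave peaks."""
    return max(range(len(series)), key=series.__getitem__)


def compare_waves(bot_requests: list[float], trends_index: list[float]) -> dict:
    """Compare a bot-request wave with a Google Trends wave on the same grid.

    Returns Pearson's r of the two series and the offset (in periods) from
    the bot peak to the Trends peak. A high r and a small offset suggest
    both sources captured the same wave.
    """
    return {
        "r": pearson_r(bot_requests, trends_index),
        "peak_offset": peak_index(trends_index) - peak_index(bot_requests),
    }
```

Correlation alone does not show which source registered a wave first. The peak offset is a rough check of timing: a positive value means the Trends peak came later than the bot peak.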

Argument 1

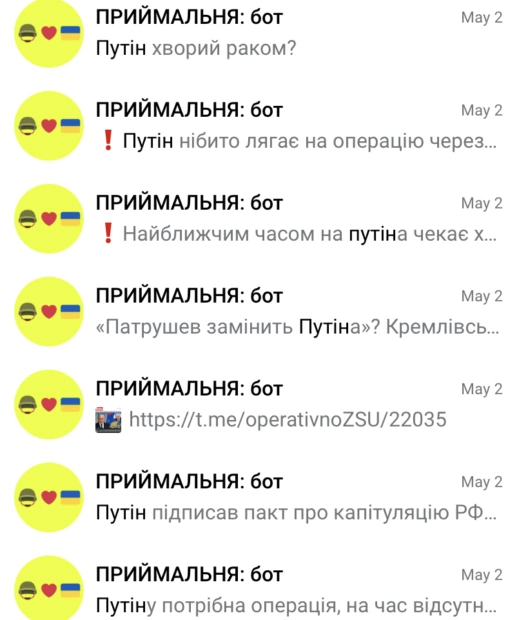

In early May, we received many requests to check information about Putin’s possible surgery, such as:

“Is Putin sick with cancer?”, “Putin is going to be operated…”, “Putin is soon to be in hospital…”

Google Trends shows that the most popular Google search queries about Putin’s operation also appeared at the beginning of May. As the screenshot above shows, our bot recorded the same timing, so we conclude that this was a general trend.

Argument 2

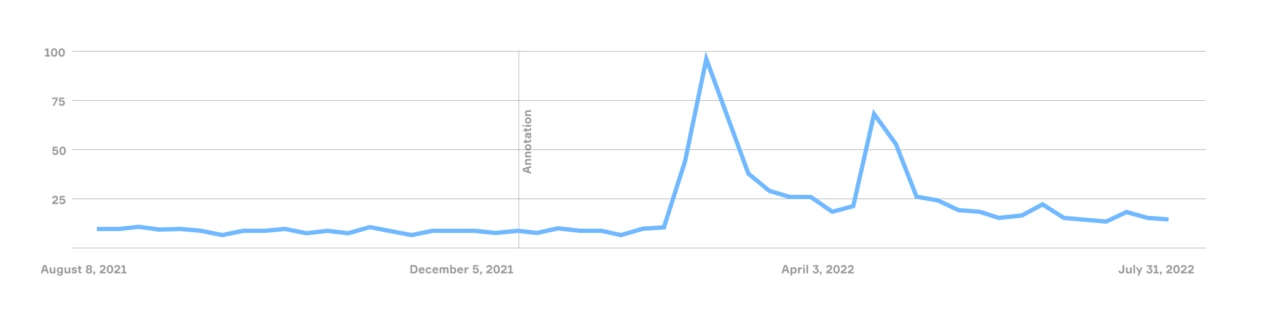

Another example of this correlation is the narrative about a possible Russian invasion of Moldova. We received requests on this topic from the end of April to the beginning of May, such as:

“Invasion to Moldova”,

“Ukrainian residents in Moldova are called to leave the country…”

Google Trends shows the same dynamics: such search queries were most popular from the end of April.

Based on these two narratives, we conclude that the chosen approach to detecting waves of disinformation is effective.

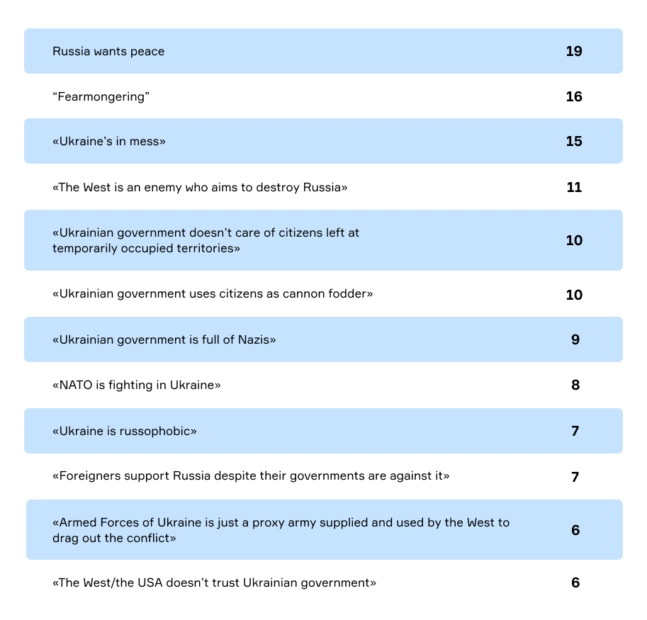

Group 2. Less common narratives

We identified 12 less common narratives among the requests we received:

- “Russia wants peace / Putin will save us all” (15.3%).

- “Fearmongering” (12.9%).

- “Ukraine is in a mess” (12.1%).

- “The West is an enemy who aims to destroy Russia” (8.9%).

- “The Ukrainian government doesn’t care about citizens left in the temporarily occupied territories” (8.1%).

- “The Ukrainian government uses citizens as cannon fodder” (8.1%).

- “The Ukrainian government is full of Nazis” (7.3%).

- “NATO is fighting in Ukraine” (6.5%).

- “Ukraine is Russophobic” (5.6%).

- “Foreigners support Russia even though their governments are against it” (5.6%).

- “The Armed Forces of Ukraine are just a proxy army supplied and used by the West to drag out the conflict” (4.8%).

- “The West/the USA doesn’t trust the Ukrainian government” (4.8%).

We cannot classify these messages as a general trend because, as we observed, they mostly targeted Russian citizens and people living in the temporarily occupied territories of Ukraine.

These narratives, which account for a total of 124 unique cases, form the necessary background noise for an audience receptive to propaganda.

Group 3. Infrequent narratives

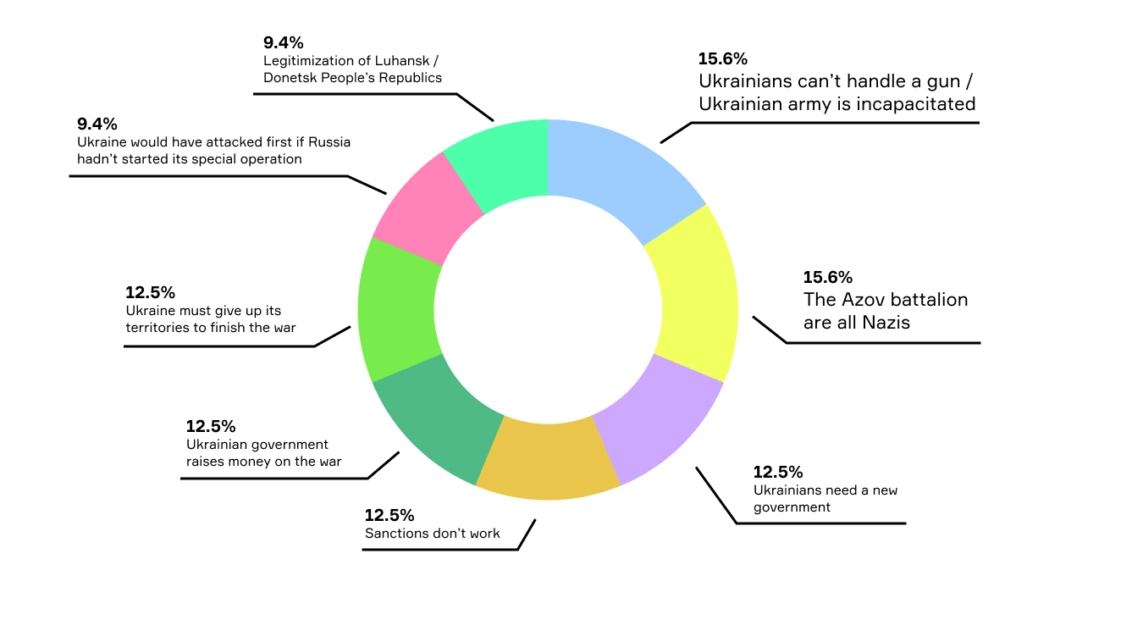

We classified 8 narratives as infrequent:

- “Ukrainians can’t handle a gun / Ukrainian army is incapacitated” (15.6%).

- “The Azov battalion are all Nazis” (15.6%).

- “Ukrainians need a new government” (12.5%).

- “Sanctions don’t work” (12.5%).

- “The Ukrainian government is making money from the war” (12.5%).

- “Ukraine must give up its territories to finish the war” (12.5%).

- “Ukraine would have attacked first if Russia hadn’t started its special operation” (9.4%).

- “Legitimization of Luhansk / Donetsk People’s Republics” (9.4%).

Based on this research, we conclude that these fakes, which account for 32 unique cases, are “surgical strikes” meant to support the rhetoric of the Russian Federation.
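Taken together, the three groups should account for every analyzed case. The minimal tally below uses only the figures stated in this report. The `GROUPS` mapping and the `summarize` function are our own illustration. It confirms 24 narratives and 298 unique cases and shows each group's share of the total.

```python
# Group figures as reported above: (number of narratives, unique cases).
GROUPS = {
    "Group 1 (most common)": (4, 142),
    "Group 2 (less common)": (12, 124),
    "Group 3 (infrequent)": (8, 32),
}


def summarize(groups: dict[str, tuple[int, int]]) -> tuple[int, int, dict[str, float]]:
    """Return total narratives, total cases, and each group's share of all cases (%)."""
    total_narratives = sum(n for n, _ in groups.values())
    total_cases = sum(c for _, c in groups.values())
    shares = {name: round(100 * c / total_cases, 1) for name, (_, c) in groups.items()}
    return total_narratives, total_cases, shares


narratives, cases, shares = summarize(GROUPS)
print(narratives, cases)  # 24 298
print(shares)  # {'Group 1 (most common)': 47.7, 'Group 2 (less common)': 41.6, 'Group 3 (infrequent)': 10.7}
```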

Conclusion

We examined the requests received by the Perevirka bot from May 1 to July 31 and analyzed 298 disinformation messages out of the 2,555 fake news items and 1,148 manipulations detected by fact-checkers during this period.

From these messages, we identified 24 narratives and divided them into three groups based on the number of unique fakes and manipulations. We also highlighted the four most widespread narratives, which appeared most often in the information space throughout the studied period.

Having compared waves of popular requests to the bot with Google search queries, we presume that Perevirka data reflects the waves of disinformation in Ukraine.

All information provided in this report can be found in our Dataset.

The research was conducted by Oleksander Tolmachov, Yuliana Topolnyk, Alesya Yashchenko, Tetiana Kraynikova, Serhiy Prokopenko, and Dasha Lobanok.